SumGuy’s Guide to Linux Log Analysis

Linux log files can be a treasure trove of information, but deciphering them can often feel like an arcane art. In this guide, we’ll unravel the mysteries of Linux logs, helping you to gain critical insights into system health, pinpoint issues, and identify potential security risks.

Understanding Core Log Locations

The foundation of effective log analysis is knowing where to look. Here are some critical Linux log file locations:

- /var/log/syslog or /var/log/messages: This is often the default catch-all location for system-wide events, errors, and general messages.

- /var/log/auth.log: Pertains specifically to authentication logs, including login attempts (both successful and failed), making it helpful for tracking potential intruders.

- /var/log/kern.log: Holds messages directly related to the Linux kernel. This is where you’ll find hardware interactions, driver issues, and other low-level system occurrences.

- /var/log/dmesg: Contains the kernel ring buffer. Often useful for troubleshooting boot processes and hardware-related errors.

- Application-Specific Logs: Web servers (Apache, Nginx), databases (MySQL, PostgreSQL), and other applications typically produce their own dedicated log files. These often reside within /var/log subdirectories.
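Not every distribution ships every one of these files, so it can help to check which ones are present before building commands around them. The following is a minimal sketch; the function name readable_logs is made up for illustration, and the paths are simply the ones listed above.

```shell
# Print each candidate log path that exists and is readable by the
# current user. readable_logs is a hypothetical helper name.
readable_logs() {
    for path in "$@"; do
        if [ -r "$path" ]; then
            printf '%s\n' "$path"
        fi
    done
}

# Check the core locations named in this guide.
readable_logs /var/log/syslog /var/log/messages /var/log/auth.log \
    /var/log/kern.log /var/log/dmesg
```

Files that exist but are not listed are usually restricted to root or a log-reading group, so the same check run with sudo may print more paths.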

Essential Tools: grep and awk

When it comes to parsing and filtering log entries, these two command-line powerhouses are indispensable:

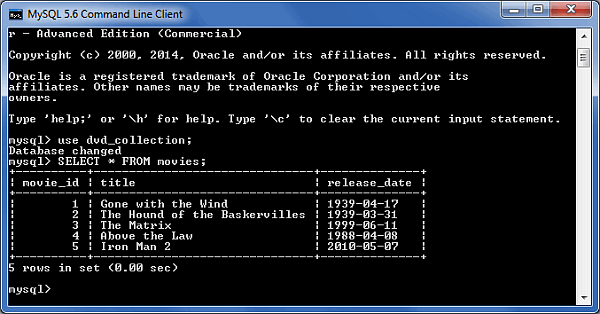

- grep: The go-to tool for basic text searches. Example:

grep "error" /var/log/syslog

This displays all lines containing the word “error” in your syslog.

- awk: A more sophisticated tool that allows you to extract specific fields within log lines and perform simple calculations. Example:

grep "Failed password" /var/log/auth.log | awk '{print $(NF-3)}' | sort | uniq -c

This provides a count of how many times each IP address appears in failed SSH login entries. (In the traditional syslog layout, field $4 is the hostname, so the address is picked by its position from the end of the line instead.)
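To make the field-extraction idea concrete, here is a minimal sketch that wraps the grep and awk steps in a function. It assumes OpenSSH's usual message layout ("Failed password for <user> from <address> port <n> ssh2"), in which the address is the fourth field from the end. The function name failed_ssh_sources is hypothetical, and the default path is only a fallback.

```shell
# Count failed SSH password attempts per source address, busiest first.
# Assumption: lines end in "... from <address> port <n> ssh2", so the
# address is field NF-3 regardless of how the timestamp is formatted.
failed_ssh_sources() {
    # $1: path to an authentication log (defaults to /var/log/auth.log)
    grep "Failed password" "${1:-/var/log/auth.log}" |
        awk '{print $(NF-3)}' |
        sort | uniq -c | sort -nr
}
```

Calling failed_ssh_sources /var/log/auth.log | head would list the ten busiest sources. Counting from the end of the line also copes with "invalid user" entries, which add two extra words before the address.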

Practical Applications

Let’s dive into some real-world use cases of log analysis:

- Spotting Errors: Filter by severity levels to swiftly reveal problems:

grep -i "error\|warn" /var/log/messages

- Security Audit: Investigate failed login attempts to identify potential attacks:

grep "Failed password" /var/log/auth.log

- Performance Monitoring: Detect trends in system load or disk usage:

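grep "load average" /var/log/messages | awk '{print $1,$2,$3,$NF}'

(This isolates the timestamp and the last field of each matching line, which is the 15-minute load average when the line ends in uptime-style output. It assumes something on your system writes load averages to the log.)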

- Detecting Brute-Force Sources: Look for repeated failed logins from the same address, which may indicate a password-guessing attack. This code extracts IP addresses from failed SSH login attempts in auth.log, counts them, and sorts the result so the most frequent sources of failed attempts appear first. (Port scans themselves typically show up in firewall logs, if those are enabled, rather than in auth.log.)

grep -E 'sshd\[[0-9]+\]: Failed password' /var/log/auth.log | awk '{print $(NF-3)}' | sort | uniq -c | sort -nr

- Spotting Suspicious User Activity: Identify potential abuse of privileges. This code identifies ‘sudo’ activity by users other than yourself, which could indicate unauthorized privilege escalation.

grep "sudo" /var/log/auth.log | grep -v "$(whoami)"

- Pinpointing Disk Space Issues: Uncover culprits hogging storage. This code calculates the size of each file and subdirectory within /var/log, then sorts the human-readable sizes, making it simple to see which logs are the largest.

du -sh /var/log/* | sort -h

- Identifying Website Errors (Apache): Investigate failed page loads, incorrect access attempts, etc. This monitors the Apache access log in real time (tail -f) and highlights HTTP error codes (400-series and 500-series) in color. The access log is used here because that is where Apache records status codes; the error log holds the matching diagnostic messages.

tail -f /var/log/apache2/access.log | grep -E --color '" [45][0-9]{2} '

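- System Response Time Trends: Measure whether responsiveness degrades at specific times. This assumes ping results are being written to your logs. It isolates the date, the time, and the seventh field, which must hold the round-trip time in milliseconds in your log format (adjust the field number if it does not). The output can then be charted over time.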

grep "ping statistics" /var/log/messages | awk '{print $1,$2,$7}'

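- Web Server Bottlenecks: Discover which URLs and clients generate the most traffic. Shows a count of requests for each combination of IP address, requested URL, and HTTP status code. Helps spot heavily requested pages and IP addresses associated with excessive requests. (The default access log formats do not record response times, so finding slow pages requires a log format that includes request duration.)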

awk '{print $1,$7,$9}' /var/log/apache2/access.log | sort | uniq -c | sort -nr

- Same as above but for Nginx:

awk '{print $1,$7,$9}' /var/log/nginx/access.log | sort | uniq -c | sort -nr
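The access log commands above can be narrowed to answer more specific questions. The sketch below assumes the common or combined log format, where field 7 is the request path and field 9 is the HTTP status code. The function names status_counts and top_server_errors are hypothetical.

```shell
# Count requests per HTTP status code, most frequent first.
# Assumption: field 9 is the status code (common/combined log format).
status_counts() {
    # $1: path to an access log
    awk '{print $9}' "$1" | sort | uniq -c | sort -nr
}

# List the request paths that most often returned a 5xx status.
top_server_errors() {
    # $1: path to an access log, $2: number of rows to show (default 10)
    awk '$9 ~ /^5[0-9][0-9]$/ {print $7}' "$1" |
        sort | uniq -c | sort -nr | head -n "${2:-10}"
}
```

For example, status_counts /var/log/nginx/access.log gives a quick health summary, and top_server_errors /var/log/apache2/access.log 5 points at the five paths most worth investigating. A custom log format may put these values in different fields.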

Pro Tips

- Timeframe Is Key: Focus on a relevant time period surrounding a problem or event of interest.

- Regular Review: Make log analysis a proactive habit, rather than only reacting to problems.

- Centralized Logging: Consider a log management solution for easier log aggregation and analysis, especially when dealing with vast amounts of data across multiple servers.
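Narrowing a log to a time window, as the first tip suggests, can be sketched with awk. This example assumes the traditional syslog timestamp ("Mon DD HH:MM:SS" in the first three fields); logs that use ISO 8601 timestamps would need a different comparison. The function name log_window is hypothetical.

```shell
# Print lines from a given day whose time falls between two HH:MM:SS
# values (inclusive). Zero-padded times compare correctly as strings.
log_window() {
    # $1: log file, $2: month (e.g. Jan), $3: day of month,
    # $4: start time, $5: end time
    awk -v mon="$2" -v day="$3" -v start="$4" -v end="$5" \
        '$1 == mon && $2 == day && $3 >= start && $3 <= end' "$1"
}
```

A call such as log_window /var/log/syslog Jan 10 12:00:00 12:30:00 prints only the half hour around an incident, and the result can be piped into any of the grep or awk commands shown earlier.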

Further Exploration

Log analysis is a vast and valuable domain. Here are some additional paths to explore:

- Regular Expressions: Harness the power of pattern matching for complex searches.

- Logrotate: Configure log rotation to prevent disk space exhaustion due to ever-growing log files.

- Advanced Analysis Tools: Look into tools like the ELK stack (Elasticsearch, Logstash, Kibana), powerful for visualization and complex queries.
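As a small taste of the regular-expression item above, the sketch below pulls every IPv4-shaped string out of its input using grep's extended syntax. The pattern only checks the shape (four groups of one to three digits), not that each number is 255 or less. The function name extract_ipv4 is hypothetical.

```shell
# Print each IPv4-shaped match on its own line.
# -E enables extended regular expressions, -o prints only the matched text.
extract_ipv4() {
    # Reads the files given as arguments, or standard input if none.
    grep -Eo '([0-9]{1,3}\.){3}[0-9]{1,3}' "$@"
}
```

Something like extract_ipv4 /var/log/auth.log | sort | uniq -c | sort -nr counts every address mentioned anywhere in the file, without depending on which field it appears in.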

Conclusion

By understanding where your logs reside, using the right tools, and knowing what to look for, you can turn these seemingly cryptic files into a powerful resource for managing and securing your Linux systems.