Docker Strategies for Load Balancing and Failover

High availability and efficient load balancing are critical to a robust Docker environment. With tools like Docker Swarm, Nginx, HAProxy, and Keepalived, developers can build resilient systems that absorb traffic spikes and recover gracefully from failures. This article explores these tools and walks through their setup and integration in a Docker deployment.

Understanding the Basics: Docker Swarm

Docker Swarm is Docker's native clustering tool. It lets you manage a group of Docker engines (nodes) as a single virtual system, which provides the foundation for scalability and high availability.

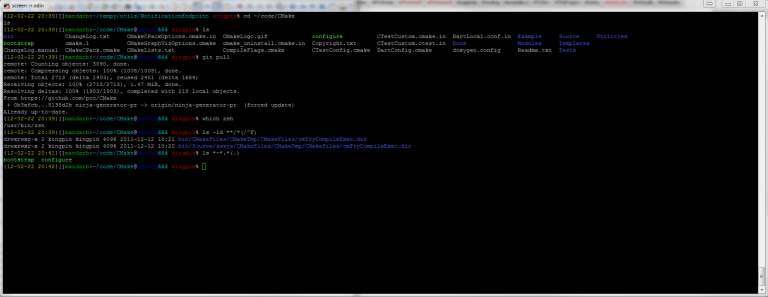
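To make the "single virtual system" idea more concrete, here is a minimal sketch of how a swarm might be inspected from a manager node. It assumes a swarm already exists and that a service named `my_web` is running (the name matches the example service used in this article's setup steps). The helper names `swarm_state` and `swarm_overview` are hypothetical.

```shell
#!/bin/sh
# Sketch: inspect a whole swarm from one manager node.
# Assumption: a service named my_web exists; adjust the name as needed.

# Print this node's swarm state (for example "active" or "inactive"),
# or "unavailable" when the Docker CLI or daemon cannot be reached.
swarm_state() {
    state=$(docker info --format '{{.Swarm.LocalNodeState}}' 2>/dev/null) || state=""
    echo "${state:-unavailable}"
}

# Show nodes, services, and replica placement for the cluster.
swarm_overview() {
    current=$(swarm_state)
    if [ "$current" != "active" ]; then
        echo "not in a swarm (state: $current)" >&2
        return 1
    fi
    docker node ls            # all nodes, with status, availability, and manager status
    docker service ls         # each service with running/desired replica counts
    docker service ps my_web  # the node each my_web replica is scheduled on
}
```

Calling `swarm_overview` on a manager prints one view of the entire cluster. On a worker, `docker node ls` is rejected, because only managers can list nodes.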

Setting Up Docker Swarm

- Initialize a Swarm: The first step is to initialize a swarm by running the `docker swarm init` command on one of your Docker engines. This machine will act as the manager node.

      docker swarm init

- Join Nodes to the Swarm: Other Docker machines can join the swarm as workers. Use the command printed in the initialization output of your manager node:

      docker swarm join --token <TOKEN> <MANAGER_IP>:2377

- Create Services: Once your swarm is set up, you can deploy services to it. A service can be configured with multiple replicas, which Docker spreads across the nodes.

      docker service create --name my_web --replicas 3 -p 80:80 nginx

Load Balancing with Nginx

Nginx is a powerful tool that can serve as a reverse proxy and load balancer in a Docker environment. It can distribute client requests or network load efficiently across multiple servers.
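To illustrate what distributing requests means in practice, the toy simulation below mimics round-robin selection, which is the method Nginx applies to a group of equally weighted backends when no other method is configured. It is a sketch of the idea rather than Nginx's implementation. The `pick_backend` helper is hypothetical, and the hostnames are the placeholder names used in this article's configuration.

```shell
#!/bin/sh
# Toy simulation of round-robin selection; not Nginx's actual code.
BACKENDS="web1.example.com web2.example.com"

# Print the backend that request number $1 (counting from 0) would go to.
pick_backend() {
    request=$1
    set -- $BACKENDS            # split the list into positional parameters
    shift $(( request % $# ))   # rotate past the backends already used
    echo "$1"
}

for n in 0 1 2 3; do
    echo "request $n -> $(pick_backend "$n")"
done
```

The loop alternates between the two hosts: requests 0 and 2 go to `web1.example.com`, requests 1 and 3 to `web2.example.com`.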

Configuring Nginx for Load Balancing

- Dockerize Nginx: Start by creating a custom Nginx Docker image that includes your load balancing configuration:

      FROM nginx:latest
      COPY nginx.conf /etc/nginx/nginx.conf

- Set Up the Nginx Config: Modify `nginx.conf` to define how load is distributed. For instance:

      # A top-level events block is required, even if empty,
      # because this file replaces the image's main nginx.conf.
      events {}

      http {
          # Pool of backend servers; requests are distributed round-robin by default.
          upstream backend {
              server web1.example.com;
              server web2.example.com;
          }

          server {
              listen 80;

              location / {
                  # Forward every request to the upstream pool.
                  proxy_pass http://backend;
              }
          }
      }

- Deploy Nginx: Run your customized Nginx container on Docker, and it will start directing traffic to your backend services.
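The deployment step could look roughly like the sketch below. It assumes the Dockerfile and `nginx.conf` from the steps above sit in the current directory. The image name `my-nginx-lb`, the container name `nginx-lb`, and the `run` and `deploy_nginx_lb` helpers are hypothetical. By default the script only prints the commands it would execute.

```shell
#!/bin/sh
# Sketch: build, check, and start the custom Nginx image.
# Hypothetical names: image "my-nginx-lb", container "nginx-lb".
# Commands are only printed unless DRY_RUN=0 is set in the environment.

DRY_RUN="${DRY_RUN:-1}"

# Print a command, then execute it unless this is a dry run.
run() {
    printf '+ %s\n' "$*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

deploy_nginx_lb() {
    # Build from the directory that holds the Dockerfile and nginx.conf.
    run docker build -t my-nginx-lb . || return 1
    # "nginx -t" parses the configuration and fails on errors,
    # including upstream hostnames that cannot be resolved.
    run docker run --rm my-nginx-lb nginx -t || return 1
    # Start in the background and publish container port 80 on the host.
    run docker run -d --name nginx-lb -p 80:80 my-nginx-lb || return 1
    # Send one request through the proxy and show the response headers.
    run curl -sI http://localhost/
}

deploy_nginx_lb
```

Running the script with `DRY_RUN=0` executes the same four commands for real and stops at the first one that fails, so a broken configuration never reaches the `docker run -d` step.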

High Availability with HAProxy

HAProxy offers high availability, load balancing, and proxying for TCP and HTTP-based applications. It is particularly well-suited for very high traffic web sites.

Integrating HAProxy in Docker

- HAProxy Configuration: As with Nginx, you configure HAProxy to accept incoming traffic, distribute it across backends, and fail over when a node goes down.

      global
          log /dev/log local0
          log /dev/log local1 notice
          maxconn 4096

      defaults
          log global
          timeout connect 5000ms
          timeout client 50000ms
          timeout server 50000ms

      frontend http_front
          bind *:80
          default_backend http_back

      backend http_back
          balance roundrobin
          # "check" enables health checks; servers that fail them are taken out of rotation.
          server web1 web1.example.com:80 check
          server web2 web2.example.com:80 check

- Run HAProxy: Create a Dockerfile for HAProxy and deploy it similarly to the Nginx setup.
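Since the article leaves the HAProxy Dockerfile to the reader, here is one way it might be prepared. The sketch writes a two-line Dockerfile into a build directory. The directory name `haproxy-lb` is hypothetical, and the destination path is the location from which the official `haproxy` image loads its configuration.

```shell
#!/bin/sh
# Sketch: prepare a build directory for a custom HAProxy image.
# Hypothetical name: directory "haproxy-lb".

BUILD_DIR="haproxy-lb"
mkdir -p "$BUILD_DIR"

# Write a Dockerfile that copies haproxy.cfg to the path the
# official haproxy image reads its configuration from.
cat > "$BUILD_DIR/Dockerfile" <<'EOF'
FROM haproxy:latest
COPY haproxy.cfg /usr/local/etc/haproxy/haproxy.cfg
EOF

# Remind the user to add the configuration before building.
if [ ! -f "$BUILD_DIR/haproxy.cfg" ]; then
    echo "next: copy your haproxy.cfg into $BUILD_DIR/ before building"
fi
```

From there, `docker build -t my-haproxy-lb haproxy-lb` followed by `docker run -d --name haproxy-lb -p 80:80 my-haproxy-lb` would build and start it, mirroring the Nginx setup (the image and container names are again placeholders). The configuration can be validated first with `docker run --rm my-haproxy-lb haproxy -c -f /usr/local/etc/haproxy/haproxy.cfg`; that check fails if the backend hostnames cannot be resolved.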

Keeping Services Alive: Keepalived

Keepalived primarily provides simple and robust facilities for load balancing and high availability to Linux systems and Linux-based infrastructures.

Using Keepalived with Docker

- Set Up Keepalived: Configure Keepalived to monitor Docker services and take action if one fails. This typically relies on VRRP (Virtual Router Redundancy Protocol) for redundancy: the node in the MASTER state holds a virtual IP address, and a peer configured with `state BACKUP` and a lower priority takes over that address if the master stops advertising.

      vrrp_instance VI_1 {
          state MASTER
          interface eth0
          virtual_router_id 51
          priority 100
          virtual_ipaddress {
              192.168.1.1
          }
      }

- Deploy Keepalived: As with the other services, Keepalived can be containerized and run across Docker nodes to monitor services and manage failover.
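The VRRP instance shown above only moves the virtual IP between nodes; monitoring a service requires a check that Keepalived runs periodically. A minimal sketch of such a check follows. It assumes the load balancer answers HTTP on the local node and that `curl` is available wherever Keepalived runs. The script name `check_lb.sh`, the check name `chk_lb`, the `LB_URL` variable, and the `lb_healthy` helper are all hypothetical.

```shell
#!/bin/sh
# check_lb.sh (hypothetical name): health probe that Keepalived could run
# through a vrrp_script block. Exit status 0 means healthy.
#
# Possible keepalived.conf wiring (the name chk_lb and the path are assumptions):
#   vrrp_script chk_lb {
#       script "/usr/local/bin/check_lb.sh"
#       interval 2   # seconds between runs
#       fall 2       # consecutive failures before the check counts as down
#       rise 2       # consecutive successes before it counts as up again
#   }
#   and, inside the existing vrrp_instance VI_1 block:
#       track_script {
#           chk_lb
#       }

# Succeed only if the URL answers within 2 seconds without an HTTP error status.
lb_healthy() {
    curl -fs -o /dev/null --max-time 2 "$1"
}

LB_URL="${LB_URL:-http://127.0.0.1:80/}"   # assumed address of the local load balancer

check_status=0
lb_healthy "$LB_URL" || check_status=1
echo "check for $LB_URL finished with status $check_status"
# Installed as a script, the last line would be: exit "$check_status"
```

Keepalived reads only the exit status of the script, so the final `exit` matters once this is installed; it is left as a comment here so the snippet can be pasted into an interactive shell without closing it. When the tracked check fails, the instance gives up the virtual IP and a backup node can claim it.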

Conclusion

Failover and load balancing are essential for Docker environments that must be resilient, reliable, and scalable. Using Docker Swarm for orchestration, Nginx or HAProxy for load balancing, and Keepalived for failover helps your Dockerized applications ride out outages and traffic fluctuations with minimal downtime. With these tools properly set up and integrated, your Docker environment can reach the level of robustness that production workloads demand.